Learning and Evaluation/Evaluation reports/2013/On-wiki writing contests

This is the program evaluation page for onwiki writing contests. It contains information based on data collected in late 2013 and will be updated on a regular basis. For additional information about this first round of evaluation, please see the overview page.

This page reports data for six program leaders, about a total of eight onwiki writing contests, of which six—from three different language Wikipedias—were mined for additional data. We worked with program leaders, when possible, to confirm specific contest data. You can learn more about response rates and limitations here.

Eight key lessons were learned:

- Onwiki writing contests aim at engaging existing editors, and having fun while developing quality content.

- We need improved tracking of inputs for onwiki writing contests. Half of the reported contests had budget data and three reported implementation hours. Program leaders need to increase their tracking of this information, as well as input hours, usernames, and event dates and times for proper evaluation of their contests.

- Program leaders rely heavily on donated prizes/giveaways for contests, followed by materials and equipment.

- Participants create or improve an average of 131 pages during a contest.

- It's hard to track the amount of content added to Wikipedia as part of a contest, since many participants edit both for the contest and in other areas of Wikipedia during the contest time period. Tools for examining time- and contest-specific article contributions may be developed to make this possible.

- The longer the duration of a contest, the more quality articles are produced. On average, contests create 28 good articles and 10 featured articles each.

- The majority of participants remain active editors after contests end. However, a slight decline in active editors is seen six months after the contest ends. Additional research should be done to discover why this happens.

- Most program leaders who implement contests are experienced enough to help others, but most don't blog or write about their work. Improved documentation about how to implement contests, and celebrating their outcomes, are needed for others to implement their own contests.

Planning an on-wiki writing contest? Check out some process, tracking, and reporting tools in our portal and find some helpful tips and links on this Resource Page.

Program basics and history


On-wiki writing contests are ways for experienced Wikipedians to come together to improve the quality and quantity of Wikipedia articles. Contests usually run for an extended set period of time, from a month to a year, and take place entirely on a Wikipedia. Contests are generally planned and managed by long-term Wikipedians, who develop the concept, subject focus (if any), rules, rating system, and prizes.

The main aim of writing contests is to take existing content, or write new content, and in some cases to develop it into quality or featured articles, producing articles that are among the finest available on Wikipedia. Point systems are often used to gauge the contributions by the participants. Points can be given for the size or quality of an article, or perhaps the addition of a photograph. At the end of the contest period, program leaders review the points and award the winners with online or offline prizes. Sometimes, a jury is brought in to review the submitted articles.

The Dutch Wikipedia was host to the first onwiki writing contest in 2004. The "Schrijfwedstrijd" focused on boosting the number of quality articles on Wikipedia, a goal that would define the entire concept of future onwiki writing contests. Inspired by the Dutch contest, later that year the German Wikipedia held its own writing contest, the "Schreibwettbewerb", which has been held for nine years. Other types of contests have followed over the years, including the WikiCup (which takes place on both the English and German Wikipedia), attempts at cross-language contests, and contests that have been sponsored by chapters[1] or cultural institutions[2].

Report on the submitted data

Response rates and data quality/limitations

We had a low response rate for online writing contests. One program leader reported on two writing contests directly through our survey. To fill in data gaps, we mined data on six additional writing contests from information available publicly on wiki (read more here about data mining). This data provided us information on program dates, budgets, number of participants, content creation/improvement, and content quality. When possible, we worked directly with program leaders to confirm mined data.

In total, this current report features data from three Wikipedia language versions. We were able to pull limited information about user retention; however, we need more data to learn more. We weren't able to pull data on how much text was added to the Wikipedia article namespace. This is because contests run over extended periods of time, and participants often make edits outside the contest subject areas. As with all program report data reviewed in this report, report data were often partial and incomplete; please refer to the notes, if any, in the bottom left corner of each graph below.

Report on the submitted and mined data

Priority goals

- Onwiki writing contests have five presumed priority goals

We were unable to determine priority goals for writing contests, since we received only one direct report. While that report did include priority goals, one report is not enough to speak for all program leaders, so we have left it out of this report.

However, we are able to determine a selection of presumed priority goals based on past logic model session workshops with the community, and the response from the one direct report we received. Those goals are:

- To build and engage the editing community

- To increase contributions

- To increase volunteer motivation and commitment

- To increase skills for editing/contributing

- To make contributing fun!

Inputs

- To learn more about the inputs that went into planning a writing contest, we asked program leaders to report on:

- The budget that went into planning and implementing the writing contest

- The hours that went into planning and implementing the writing contest

- Any donations that they might have received for the contest, such as access to a venue, equipment, food, drink, and giveaways.

Budget

- Budgets were available for four out of eight onwiki writing contests. The average contest costs US$450 to implement.

We were able to collect budget information for half of the eight reported onwiki writing contests. One of those four budgets reported was zero dollars. The other budgets reported ranged from $400 to $2,000. On average, contests cost $450 to implement.[3][4]
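The budget figures above can be checked with a short calculation. This is a minimal sketch, assuming the three non-zero budgets listed in the appendix inputs table and assuming the reported SD is a sample standard deviation (n − 1 denominator); the variable names are illustrative only.

```python
from statistics import mean, median, stdev

# Non-zero budgets in USD, as listed in the appendix inputs table
# (report IDs 62, 20, and 58). The zero-dollar budget is excluded,
# following the note that averages use non-zero budgets only.
nonzero_budgets = [2000.00, 400.00, 450.00]

budget_median = median(nonzero_budgets)  # the "average" quoted in the text
budget_mean = mean(nonzero_budgets)
budget_sd = stdev(nonzero_budgets)       # sample SD; an assumption about the method

print(f"median ${budget_median:,.2f}, mean ${budget_mean:,.2f}, SD ${budget_sd:,.2f}")
```

With only three observations, the single $2,000 budget pulls the mean well above the median, which is why the median is the more representative figure here.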

Hours

- Volunteer and staff hours were available for three of the contests. Program leaders and their organizing teams put an average of 70 hours into implementing contests.

Staff hours were reported for two of eight contests (25%). One report was zero hours (no staff hours went into implementing the contest) and the other was 10 hours. Volunteer hours were reported for three out of eight contests (38%) and ranged from 50.0 to 77.5 hours, with an average of 60.0.[5] Combining staff and volunteer hours, total input hours ranged from 50.0 to 77.5 with an average of 70.0.[6]

Donated resources

- Donated resources are important for contests. Prizes/giveaways are the most popular donated resource, followed by materials or equipment.

We collected data about donated resources for four out of the eight contests. Of those four, 75% reported having received donations of prizes/giveaways, and half reported having received donated materials/equipment. None of the four reported donated food or meeting space, which are not a necessity for on-wiki editing events that take place primarily online rather than in person (see Graph 1).

Outputs

- We asked about one output in this section:

- How many people participated in the onwiki writing contest?

Participation


- The average on-wiki writing contest has 29 participants.

We collected participant data for all eight reported writing contests. Participant counts ranged from 6 to 115, with an average (median) of 29.[7]

- Contests put an average of just over US$13 and one hour of implementation time towards each participant of on-wiki writing contests.

We received non-zero budget data for three on-wiki contests. This data allowed us to learn what the cost per participant was based on the budget inputs. Costs ranged from $9.00 to $74.07 per participant, with an average (median) of $13.33 to fund one participant's involvement in a contest.[8]

Three programs also reported hour inputs. This allowed us to learn how much time went into implementing on-wiki writing contests per participant. Reported hours ranged from 0.67 to 2.33 hours per participant. On average, one hour of input time went into each participant.[9]
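The per-participant figures are simple ratios of inputs to participant counts. The sketch below recomputes them, assuming the budgets, total hours, and participant counts shown in the appendix tables; the dictionary layout and names are illustrative, not part of the original analysis.

```python
from statistics import median

# report ID -> (budget in USD, participants), for the three non-zero budgets
budgets = {62: (2000.00, 27), 20: (400.00, 30), 58: (450.00, 50)}

# report ID -> (total input hours, participants), for the three contests reporting hours
hours = {51: (77.5, 115), 20: (70.0, 30), 58: (50.0, 50)}

# Divide each input by the number of participants in the same contest.
dollars_per_participant = {rid: usd / n for rid, (usd, n) in budgets.items()}
hours_per_participant = {rid: h / n for rid, (h, n) in hours.items()}

median_dollars = median(dollars_per_participant.values())
median_hours = median(hours_per_participant.values())

print(f"median cost per participant: ${median_dollars:.2f}")
print(f"median hours per participant: {median_hours:.2f}")
```

The lowest and highest ratios correspond to the ranges quoted above ($9.00 to $74.07, and 0.67 to 2.33 hours).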

Outcomes

- On average, contest participants create or improve 131 pages, costing an average of $1.67 USD each.

Unlike our reporting on edit-a-thon outcomes, for this specific program evaluation we chose to focus on how many articles were improved/created through on-wiki writing contests. We decided to report on how many article pages have been created/improved instead of how many characters were added. This is because on-wiki writing contest participants also add characters across the Wikipedia article space outside of their contest work, so a count of characters added would misrepresent contest output.

Seven of the eight reported contests (88%) had data available about how many article pages were created/improved during their on-wiki editing contest. Counts ranged from 22 to 6,374 pages per contest. The average number of pages created/improved was 131.[11]
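The gap between the median and the mean for this measure shows why the averages in this report refer to the median. The following is a minimal sketch, assuming the seven page counts are read from the appendix outcomes table (the assignment of values to the "Pages Created or Improved" column is our reading of that table).

```python
from statistics import mean, median

# Pages created or improved per contest (seven of eight contests reported).
# Values are read from the appendix outcomes table; the column assignment
# is an assumption, since some cells in that table are collapsed.
pages = [6374, 44, 1200, 40, 22, 1000, 131]

pages_median = median(pages)
pages_mean = mean(pages)

print(f"median: {pages_median}, mean: {pages_mean:.0f}")
```

One contest with 6,374 pages raises the mean to roughly ten times the median, so the median better describes a typical contest.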

We were also able to learn, for the three programs that reported non-zero budgets, how much each page cost based on budget input. Dollars per page ranged from $0.40 USD to $3.44 USD. On average, the monetary cost per page was $1.67 USD.[12]

- Image uploads are not an intended outcome for on-wiki writing contests, but half of the contests in this report did upload images, with 33 on average per contest.

We were curious to know if image uploading was a frequent occurrence in on-wiki writing contests. Four out of the eight program leaders (50%) included image upload data. Upload counts ranged from one to 250. The average upload amount per contest was 33.[13]


Graph 2: Dollars to pages, participation, and pages created/improved. This bubble graph displays participation against the dollars per page created/improved. It uses bubble size to depict the total number of pages created/improved for the three contests for which budgets were available along with a report of the number of article pages created/improved. Although these few points appear to associate more pages created/improved with fewer dollars spent, there are too few observations to draw any such conclusion.


Graph 3: Dollars to pages created. This box plot shows the distribution of the three contests' costs in terms of dollars spent per page created/improved. As illustrated by the long vertical line running from the low of $0.40 to the high of $3.44, results were highly variable for this small set of data. Importantly, this analysis does not measure how many pages of written text were actually produced by these contests.

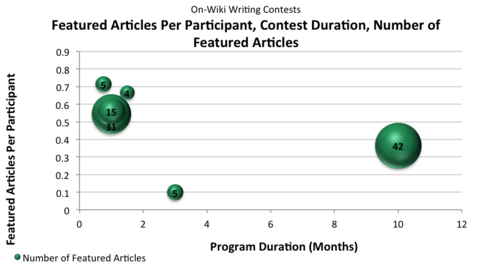

- On-wiki writing contests aim to improve the quality of Wikipedia articles, and succeed at doing it. The longer the contest, the more quality articles, but even the shortest contests succeed at improving the quality of Wikipedia articles.

We asked program leaders to report about the type of quality articles being produced through on-wiki writing contests. We asked about two types of article quality types found in Wikipedia: good articles and featured articles.

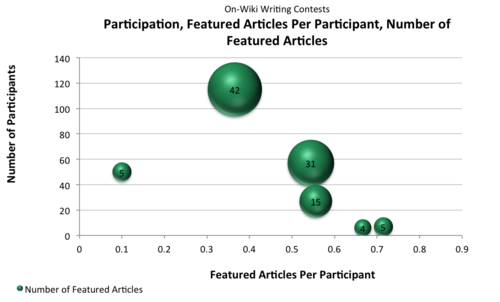

Six contests out of eight (75%) reported how many good articles were produced from their contests. Reports ranged from seven to 436 good articles, with an average of 28 produced (see Graph 4).[14] The same number of contests, six out of eight, reported how many featured articles were created during their contests. Featured articles, which are the highest standard in Wikipedia article quality, ranged from counts of four to 42. Those six contests averaged 10 featured articles each (see Graph 5).[15]

Based on the reported data, we discovered that the contest with the most articles rated as good or featured lasted the longest (10 months), but contests that were shorter (3 months) also produced an impressive number of high-quality articles (see Graph 6 and Graph 7). We also learned that the more participants, the more quality content is produced, but it appears that participants in smaller contests tend to produce high amounts of quality content, too (see Graph 4 and Graph 5).


Graph 4: Participation, good articles per participant, number of good articles. One of the key goals for contests is improving article quality. The bubble graph plots the number of participants against the number of good articles per participant, with the total number of good articles shown by bubble size and label. As illustrated by the bubbles in the graph, the number of good articles per participant ranged from zero to three. For four of the six contests for which data were available, at least one good article was produced per participant. Although it also appears that as the number of participants increases, so does the number of good articles, some of the highest rates of good articles per participant occur within the smaller contests.


Graph 5: Participation, featured articles per participant, and number of featured articles. This bubble graph examines the number of participants and the number of featured articles per participant with the total number of featured articles. The total number of featured articles for each contest is illustrated by bubble size and label. As illustrated by the bubbles, similar to the rate of good articles per participant as the number of participants increases, the number of featured articles per participant seems to decrease. The four bubbles to the right of the 0.5 mark on the x-axis indicate that for those four contests there was at least one featured article for every two participants.


Graph 6: Good articles per participant, contest duration, number of good articles. This bubble graph compares program duration with good articles per participant and the number of articles produced in a contest. We can see that although the program that lasted the longest produced the most good articles, the contests that lasted under four months also produced a considerable amount of good articles.


Graph 7: Featured articles per participant, contest duration, number of featured articles. As in Graph 6, this graph compares contest duration with the number of featured articles per participant. The bubble size depicts the number of featured articles produced. This graph shows a similar story, emphasizing that programs that lasted only one month were still able to produce a large number of featured articles.
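The per-participant rates plotted in Graph 5 can be recomputed from the participant and featured article counts in the appendix. This is a small sketch under that assumption; the names are illustrative.

```python
# report ID -> (participants, featured articles), from the Graph 5 data table
featured = {
    51: (115, 42),
    52: (57, 31),
    124: (27, 15),
    66: (7, 5),
    68: (6, 4),
    58: (50, 5),
}

# Featured articles per participant for each contest.
rate = {rid: articles / n for rid, (n, articles) in featured.items()}

# Contests with at least one featured article for every two participants.
at_least_one_per_two = sorted(rid for rid, r in rate.items() if r >= 0.5)

for rid, r in rate.items():
    print(f"contest {rid}: {r:.2f} featured articles per participant")
print("at least 0.5 per participant:", at_least_one_per_two)
```

Four of the six contests pass the 0.5 threshold, matching the four bubbles to the right of the 0.5 mark described for Graph 5.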

Recruitment and retention of new editors

- The majority of on-wiki editing contest participants are existing editors who continue to be active three and six months after the event. However, retention numbers slightly decline by six months, and additional research needs to be done to discover why.

On-wiki writing contests are unique in that they target experienced editors. With this in mind, recruitment and retention data for writing contests combines both new and existing editors, and likely illustrates the fact that Wikipedia editing is higher in the winter than it is in the summer, as well as the potential importance of writing contests for continuously engaging experienced editors in the long term. We wanted to discover retention and active editor data three and six months after contests end (see Graph 8).

Data was available for five out of eight reported (63%) contests for both three and six months after the events. We only had data for those five, because the other three contests had not passed their three and six months post-event time periods.

- Three months after: Retention rates three months after the end of the contests ranged from 60% to 100%, with an average (median) of 83%.[16]

- Six months after: Retention rates six months after the end of the contests ranged from 60% to 86%, with an average (median) of 79%.[17]

We need to do additional research to learn why retention numbers decline slightly six months after a contest (from an average of 83% to 79%), and to understand editor motivations: are contest participants more prone to edit during the contest itself, or do they go back to "regular" editing habits after the contest ends?

At the three-month follow-up, 60% to 100% of contest participants were retained as active editors, with an average of 83%. At six months, 60% to 86% of contest participants were retained as active editors, with an average of 79%. Seasonal editing changes may play a role (Wikipedia gets edited less in the summer than in the winter), but early data show fewer participants actively editing six months after the contest. We need more research to learn whether contests motivate editors to produce more for a specific time period before returning to their normal editing activities, or whether contests lead to a decline in editing after they end.
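Retention rates are the number of participants still actively editing divided by the number of contest participants. The sketch below shows the calculation, assuming the retention counts in the appendix outcomes table are read as listed here (the assignment of values to the three-month and six-month columns is our reading of that table, in which some cells are collapsed).

```python
from statistics import mean, median

# report ID -> number of participants (appendix outputs table)
participants = {51: 115, 52: 57, 66: 7, 20: 30, 58: 50}

# report ID -> participants still active after the contest.
# Column assignment is an assumption based on the appendix outcomes table.
retained_3mo = {51: 85, 52: 51, 66: 7, 20: 25, 58: 30}
retained_6mo = {51: 86, 52: 49, 20: 25, 58: 30}


def retention_rates(retained):
    """Return the share of each contest's participants still active."""
    return [count / participants[rid] for rid, count in retained.items()]


rates_3mo = retention_rates(retained_3mo)
rates_6mo = retention_rates(retained_6mo)

for label, rates in (("3 months", rates_3mo), ("6 months", rates_6mo)):
    print(
        f"{label}: low {min(rates):.0%}, high {max(rates):.0%}, "
        f"median {median(rates):.0%}, mean {mean(rates):.0%}"
    )
```

Under this reading, the medians (83% and 79%) and means (81% and 76%) agree with the figures in the summative data table.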

Replication and shared learning

- The majority of program leaders who implement on-wiki writing contests are experienced enough to help others implement their own contests. Only a minority of on-wiki writing contests produce blogs or other online information about their implementations.

In terms of steps toward replication and shared learning:

- 88% of the contests were implemented by an experienced program leader who could help others in conducting their own on-wiki writing contest

- 38% had blogged about or shared information online about their contests

Summary, suggestions, and open questions

How does the program deliver against its own goals?

On-wiki writing contests are community-driven activities that share a simple yet compelling goal: they aim at improving the amount and quality of content on Wikipedia during a competitive (and sometimes collaborative[18]), time-limited event.

Writing contests clearly deliver against all three of their goals:

- They engage existing editors by offering a compelling opportunity to compete against each other.

- They are fun as indicated by the fact that some of the contests could continuously attract participants over years.

- They produce high quality content as indicated by the number of articles that attain good or featured article status as a result of the contest.

These results are somewhat unsurprising. Many long-term Wikipedians who belong to the group of "article writers" get recognition through the production of quality content. The more quality content they produce and the higher the quality of their articles, the more social capital they build among their fellow Wikipedia colleagues. Winning a writing contest, or even achieving a good result, adds additional recognition. At the same time, writing contests offer an opportunity for this specific group of Wikipedians to do what they love most: writing articles. And finally, by offering prizes for people who review other participants' articles during the event, some on-wiki writing contests also improve the cohesion among this sub-group of the community.

How does the cost of the program compare to its outcomes?


Writing contests target long-term Wikipedians, most of whom have been active contributors to Wikipedia's article namespace for several years. This implies that writing contests attract participants who don't need any kind of "how-to edit" on-boarding. Almost everybody who participates has learned the skills needed to edit prior to the start of the contest. Also, participants usually don't need any additional assistance or funding for taking part in the event. Contests don't need a venue (as is the case with edit-a-thons), special technical equipment (as is the case with photo upload initiatives like "Festivalsommer", which require participants to have cameras), or externally funded prizes (as is the case with most Wiki Loves Monuments events) in order to produce outcomes.[19] Although in at least one case, the German "Schreibwettbewerb", a local chapter started to support jury members financially through reimbursements of travel costs (in order to make the work of the jury more efficient), most writing contests don't necessarily require external funding on the organizational level. The WikiCup on the English Wikipedia is a good example of an on-wiki writing contest that has attracted a group of long-time Wikipedians without ever having been funded by an external entity. And in those cases where external funding has been reported, the amounts of money spent have been comparatively low, ranging between $400 USD and $2,000 USD per event.

Given the low costs and the large amount of quality content contributed to Wikipedia for those writing contests that have been included in this analysis, on-wiki writing contests seem to be one type of programmatic activity that has the best cost-benefit ratio when it comes to article (i.e. textual content) improvement on Wikipedia. Two of the most successful and popular events of this kind – the German "Schreibwettbewerb" and the WikiCup on the English and German Wikipedia – have little or no monetary costs at all (because most of the work of planning and executing the contest is being done by volunteers) while producing large amounts of new and improved articles.

How easily can the program be replicated?

To our knowledge, there is currently no documentation available that would describe how to effectively run an on-wiki writing contest. Also, a number of questions remain to be answered. First, it has yet to be analyzed what makes some writing contests (e.g. the WikiCup) more successful than others. Then, it is currently unclear why some communities – like the German – have developed more contest-like activities than others. Finally, it is also unclear why some of the most successful types of this programmatic activity (e.g. WikiCup, Schreibwettbewerb) have not been replicated more widely across language versions (compared to other specific types of programmatic activities like Wiki Loves Monuments).

Next Steps

- Next steps in brief

- Increased tracking of detailed budgets and hour inputs by program leaders

- Improved ways of tracking usernames and event dates/times for program leaders to make it easier and faster to gather such data. This will allow us to learn more about user contributions and retention. We hope to support the community in creating these methods.

- Develop ways to track participants' contributions that are specific to the contest, since participants usually edit both content related to the contest and content unrelated to it during the contest period. This will allow us to learn more about the specific contributions of participants in the article space regarding characters added.

- Explore qualitative data collection which allows us to learn if contests are meeting priority goals related to motivation, skill improvement, and fun.

- Support program leaders in developing "how-to" guides and blogs related to their contests for replication purposes

- Next steps in detail

As with all of the programs reviewed in this report, it is key that efforts are made toward properly tracking and valuing programming inputs in terms of budgets and hours invested, as well as tracking usernames and event dates for proper monitoring of user behaviors before, during, and after events. In addition, it will be key to more carefully track the content production efforts of participants specific to contests, as contests often run alongside editors' usual day-to-day participation. Further investigation of expectations and efforts directed toward other goal priorities is needed, including examining the potential of contest participation to increase motivation and commitment, increase skills for editing, and make contributing fun. It will be important to more clearly articulate programming activities specifically aligned to those key goals, and to develop strategies for measuring and valuing such outcomes.

Appendix

Summative Data Table: On-wiki Writing Contests (Raw Data)

| | Percent Reporting | Low | High | Mean | Median | Mode | SD |

|---|---|---|---|---|---|---|---|

| Non-Zero Budgets[20] | 36% | $400.00 | $2,000.00 | $950.00 | $450.00 | $400.00 | $909.67 |

| Staff Hours | 25% | 0.00 | 10.00 | 5.00 | 5.00 | 0.00 | 7.07 |

| Volunteer Hours | 38% | 50.00 | 77.50 | 62.50 | 60.00 | 50.00 | 13.92 |

| Total Hours | 38% | 50.00 | 77.50 | 65.83 | 70.00 | 50.00 | 14.22 |

| Donated Meeting Space | 0% | Not Applicable - Frequency of selection only | |||||

| Donated Materials/ Equipment | 50%[21] | Not Applicable - Frequency of selection only | |||||

| Donated Food | 0% | Not Applicable - Frequency of selection only | |||||

| Donated Prizes/Give-aways | 75%[22] | Not Applicable - Frequency of selection only | |||||

| Participants (b1) | 100% | 6 | 115 | 40 | 29 | 27 | 35 |

| Dollars to Participants | 50% | $9.00 | $74.07 | $32.14[23] | $13.33 | none | $36.38 |

| Input Hours to Participants | 43% | 0.67 | 2.33 | 1.34 | 1.00 | 0.67 | 0.88 |

| Bytes Added | 0% | No data reported | |||||

| Dollars to Text Pages (by Byte count) | 0% | No data reported | |||||

| Input Hours to Text Pages (by Byte count) | 0% | No data reported | |||||

| Photos Added | 50% | 1 | 250 | 79 | 33 | 1 | 117 |

| Dollars to Photos | 25% | $31.75 | $400.00 | Report Count 2 - Not key outcome | $0.00 | $0.00 | $0.00 |

| Input Hours to Photos | 29% | 25.83 | 70.00 | 47.92 | 47.92 | 25.83 | 31.23 |

| Pages Created or Improved | 88% | 22 | 6374 | 1259 | 131 | none | 2309 |

| Dollars to Pages Created/Improved | 38% | $0.40 | $3.44 | $1.84 | $1.67 | none | $1.50 |

| Input Hours to Pages Created/Improved | 25% | 0.07 | 0.38 | 0.23 | 0.23 | none | 0.20 |

| UNIQUE Photos Used | 25% | 3 | 55 | 29 | 29 | 3 | 37 |

| Dollars to Photos USED (Non-duplicated count) | 29% | $0.00 | $36.36 | $18.18 | $18.18 | $0.00 | $25.71 |

| Input Hours to Photos USED (non-duplicated count) | Report count of 1 | ||||||

| Good Article Count | 75% | 7 | 436 | 102 | 28 | none | 168 |

| Featured Article Count | 75% | 4 | 42 | 17 | 10 | 5 | 16 |

| Quality Image Count | Not Applicable | ||||||

| Valued Image Count | Not Applicable | ||||||

| Featured Picture Count | 0% | Not applicable - None reported | |||||

| 3 Month Retention | 63% | 60% | 100% | 81% | 83% | none | 15% |

| 6 Month Retention | 63% | 60% | 86% | 76% | 79% | none | 12% |

| Percent Experienced Program Leader | 88% | Not Applicable - Frequency of selection only | |||||

| Percent Developed Brochures and Printed Materials | 0% | Not Applicable - Frequency of selection only | |||||

| Percent Blogs or Online Sharing | 38% | Not Applicable - Frequency of selection only | |||||

| Percent with Program Guide or Instructions | 0% | Not Applicable - Frequency of selection only | |||||

Bubble Graph Data

Graph 2. Dollars to Pages, Participation, and Pages Created.

| R_ID | Participation | Dollars to pages | Bubble Size: Number of Article Pages Created or Improved |

|---|---|---|---|

| 62 | 27 | $1.67 | 1200 |

| 20 | 30 | $0.40 | 1000 |

| 58 | 50 | $3.44 | 131 |

Graph 4. Participation, Good Articles per Participant, Number of Good Articles.

| R_ID | Good Articles per Participant | Number of Participants | Bubble Size: Number of Good Articles |

|---|---|---|---|

| 51 | 1.3 | 115 | 436 |

| 52 | 0.6 | 57 | 35 |

| 124 | 0.3 | 27 | 9 |

| 62 | N/A | 27 | N/A |

| 66 | 3.0 | 7 | 21 |

| 68 | 1.2 | 6 | 7 |

| 20 | N/A | 30 | N/A |

| 58 | 2.1 | 50 | 103 |

Graph 5. Participation, Featured Articles per Participant, and Number of Featured Articles.

| R_ID | Featured Articles per Participant | Number of Participants | Bubble Size: Number of Featured Articles |

|---|---|---|---|

| 51 | 0.37 | 115 | 42 |

| 52 | 0.54 | 57 | 31 |

| 124 | 0.56 | 27 | 15 |

| 62 | N/A | 27 | N/A |

| 66 | 0.71 | 7 | 5 |

| 68 | 0.67 | 6 | 4 |

| 20 | N/A | 30 | N/A |

| 58 | 0.10 | 50 | 5 |

Graph 6. Good Articles per Participant, Contest Duration, Number of Good Articles.

| R_ID | Program Duration(months) | Good Articles per Participant | Bubble Size: Number of Good Articles |

|---|---|---|---|

| 51 | 10 | 1.30 | 436 |

| 52 | 1 | 0.61 | 35 |

| 124 | 1 | 0.33 | 9 |

| 62 | 1 | N/A | N/A |

| 66 | 0.75 | 3 | 21 |

| 68 | 1.5 | 1.17 | 7 |

| 20 | 5.5 | N/A | N/A |

| 58 | 3 | 2.06 | 103 |

Graph 7. Featured Articles per Participant, Contest Duration, Number of Featured Articles.

| R_ID | Program Duration(months) | Featured Articles per Participant | Bubble Size: Number of Featured Articles |

|---|---|---|---|

| 51 | 10 | 0.37 | 42 |

| 52 | 1 | 0.54 | 31 |

| 124 | 1 | 0.56 | 15 |

| 62 | 1 | N/A | N/A |

| 66 | 0.75 | 0.71 | 5 |

| 68 | 1.5 | 0.67 | 4 |

| 20 | 5.5 | N/A | N/A |

| 58 | 3 | 0.10 | 5 |

More Data

Note: The Report ID is a randomly assigned ID variable used to match the data across the inputs, outputs, and outcomes data tables.

Program Inputs

| R_ID | Budget | Staff Hours | Volunteer Hours | Total Hours | Space Donated | Equipment | Food | Prizes |

|---|---|---|---|---|---|---|---|---|

| 51 | $0.00 | 77.50 | 77.5 | |||||

| 52 | ||||||||

| 124 | Yes | |||||||

| 62 | $2,000.00 | Yes | Yes | |||||

| 66 | ||||||||

| 68 | ||||||||

| 20 | $400.00 | 10 | 60.00 | 70 | Yes | |||

| 58 | $450.00 | 0 | 50.00 | 50 | Yes |

Outputs: Participation and Content Production

| R_ID | Program Length (months) | Number of Participants |

|---|---|---|

| 51 | 10 | 115 |

| 52 | 1 | 57 |

| 124 | 1 | 27 |

| 62 | 1 | 27 |

| 66 | 0.75 | 7 |

| 68 | 1.5 | 6 |

| 20 | 5.5 | 30 |

| 58 | 3 | 50 |

Program Outcomes: Quality Improvement and Active Editor Recruitment and Retention

| R_ID | d4 UNIQUE Photos Used | c7 Pages Created or Improved | d2 Good Articles | d3 Featured Articles | 3 month retention count | 6 month retention count |

|---|---|---|---|---|---|---|

| 51 | 3 | | 436 | 42 | 85 | 86 |

| 52 | | 6374 | 35 | 31 | 51 | 49 |

| 124 | | 44 | 9 | 15 | | |

| 62 | 55 | 1200 | | | | |

| 66 | | 40 | 21 | 5 | 7 | |

| 68 | | 22 | 7 | 4 | | |

| 20 | | 1000 | | | 25 | 25 |

| 58 | | 131 | 103 | 5 | 30 | 30 |

Notes

- ↑ Wikimedia Argentina has sponsored the Iberoamericanas contest, which inspired participants from around the world to improve content on Spanish Wikipedia about Latin American women.

- ↑ E.g. the British Museum's featured article prize, which encouraged participants to improve content about British Museum holdings for a chance to win publications from the British Museum bookstore.

- ↑ Averages reported refer to the median response

- ↑ Mean = $950, SD = $910.

- ↑ Mean=62.50, SD=13.92

- ↑ Mean=65.83, SD=14.22.

- ↑ Median= 29, Mean=39.88, SD=35.25

- ↑ Median= $13.33, Mean=$32.14, SD=$36.38

- ↑ Median= 1.0, Mean=1.34, SD=0.88.

- ↑ Note: Although "content production" is a direct product of the program event itself and technically a program output rather than an outcome, most of the program leaders who participated in the logic modeling session felt this direct product was the target outcome for their programming. To honor this community perspective, we include it as an outcome along with quality improvement and retention of "active" editors.

- ↑ Median=131, Mean = 1259, SD = 2309

- ↑ Median = $1.67, Mean = $1.84, SD = $1.50.

- ↑ Mean = 79.25, SD = 117.41

- ↑ Median=28, Mean = 102.83, SD = 167.50

- ↑ Mean = 17, SD = 16.01

- ↑ Median = 83%, Mean = 81%, SD = 15%

- ↑ Median = 79%, Mean = 76%, SD = 12%

- ↑ Since 2010, the "Schreibwettbewerb" on the German Wikipedia has been awarding a prize for people who review the participants' articles during the event.

- ↑ Actually, one contest – the "Schreibwettbewerb" on the German Wikipedia – encourages existing community members to donate prizes, which apparently increases the fun factor for the community as a whole.

- ↑ Only 3 reports of non-zero budgets

- ↑ Percentages out of 4 who provided direct report

- ↑ Percentages out of 4 who provided direct report

- ↑ Non-zero budgets calculated for averages. Only 3 reports (one zero-dollar budget).